How creators can reach Japan, Thailand, Spain, and beyond with next-gen ADR technology

The Global Video Game Has Changed

Once, if you wanted your videos seen in Japan, Thailand, or Spanish-speaking countries, you either had to rely on English captions or pay for full human translation and dubbing. Both routes had limits: captions don’t help you appear in local search results, and traditional dubbing is costly and slow.

Today, AI-driven dubbing and ADR-style (automated dialogue replacement) voice tech make it possible to sound like yourself in another language, synced to your lips, without hiring a full post-production team. And yes, the quality has reached the point where it’s not just good enough for YouTube… it’s creeping into Netflix territory.

YouTube Is Still King (in Most Countries)

Before diving into AI dubbing tools, here’s where YouTube stands globally:

- Japan → YouTube dominates alongside local favorite Niconico (bullet-comment anime & gaming culture).

- Thailand → YouTube, Facebook Live, and TikTok are all strong, with Facebook leading in live selling and community content.

- Spanish-speaking markets → YouTube is a powerhouse, often rivaling TV in daily viewing hours.

In all three regions, localized titles, descriptions, tags, and thumbnails make or break whether your video even gets seen. You can rely on captions alone, but that won’t help much with local search or click-through rates.
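To make the metadata side concrete, here is a minimal Python sketch that assembles per-language titles and descriptions into one payload. The shape follows the `localizations` part of the YouTube Data API video resource as best I understand it (a map from language code to `title` and `description`), so treat it as an assumption and check the API reference before sending anything. The function name, the sample video ID, and the sample translations are all hypothetical, and nothing here calls the API.

```python
from dataclasses import dataclass

# YouTube limits video titles to 100 characters.
MAX_TITLE_LENGTH = 100


@dataclass
class LocalizedText:
    """Title and description for one target language (hypothetical helper type)."""
    title: str
    description: str


def build_localizations_body(video_id, translations):
    """Return a dict shaped like a video resource carrying only localizations.

    Assumption: the payload mirrors the `localizations` part of the YouTube
    Data API video resource. A real update has further requirements (auth,
    a default language on the video), none of which are handled here.
    """
    localizations = {}
    for language_code, text in translations.items():
        if not text.title.strip():
            raise ValueError(f"Empty title for language '{language_code}'")
        if len(text.title) > MAX_TITLE_LENGTH:
            raise ValueError(f"Title too long for language '{language_code}'")
        localizations[language_code] = {
            "title": text.title,
            "description": text.description,
        }
    return {"id": video_id, "localizations": localizations}


# Hypothetical sample data, for illustration only.
sample = {
    "ja": LocalizedText("ストリート撮影の裏側", "ハンボルトで撮影した短編の舞台裏。"),
    "es": LocalizedText("Detrás de cámaras", "Cómo filmamos en las calles de Humboldt."),
}
body = build_localizations_body("VIDEO_ID_PLACEHOLDER", sample)
```

Tags and thumbnail text are not part of this payload, so they still need to be localized separately.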

Why Captions Alone Aren’t Enough

YouTube’s auto-translate feature is great for accessibility, but:

- It doesn’t translate your title, tags, or thumbnail text.

- Machine translation often misses slang, nuance, and emotion.

- Captions won’t boost search discoverability in a foreign language — only localized metadata will.

If you want real reach, you need audio in their language, ideally lip-synced and tone-matched to your original performance.

Enter AI Dubbing with ADR Sync

Here are the top AI dubbing platforms that balance speed, cost, and quality — with a focus on ADR-level sync and emotional realism.

| Platform | Lip-Sync | Voice Cloning | Emotion Retention | Editing Control | Best For |
|---|---|---|---|---|---|
| HeyGen | ✅ | ✅ | ✅ | Limited | Fast, natural lip-sync for YouTube & social. |
| Rask.ai | ✅ | ✅ | ✅ | ✅ | Long-form content, documentaries, multi-hour dubs. |
| ElevenLabs Dubbing Studio | ❌ | ✅ | ✅ | ✅ | Perfect audio emotion; sync in post. |
| Papercup | ✅ | ⚠️ | ✅ | ✅ | Broadcast-quality ADR for streaming. |
| DeepDub | ✅ | ✅ | ✅ | ✅ | Film-level localization for narrative projects. |

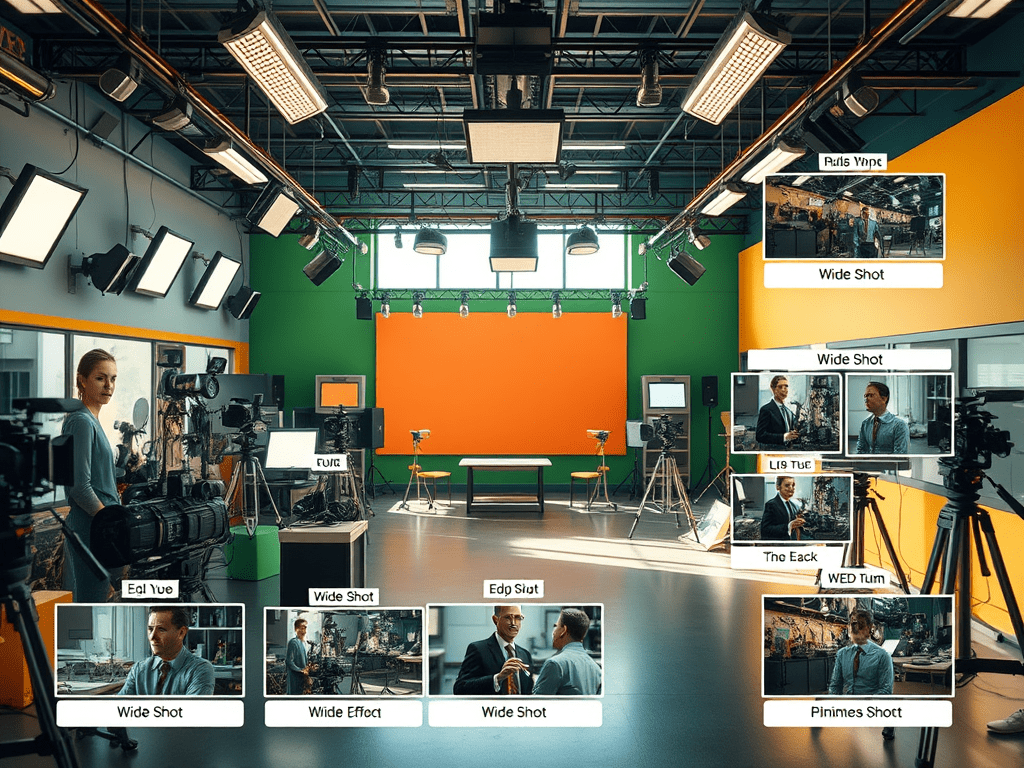

Workflow for Creators

Whether you’re pushing content for YouTube or producing an indie film, the workflow is the same:

- Upload Original Footage → AI handles translation & voice generation.

- Select Language & Voice → Clone your own voice or pick a close match.

- Generate Dub → Review lip match, pacing, and tone.

- Export Stems → Keep dialogue separate from music/effects.

- ADR Polish in Post → Fine-tune timing, breaths, and ambience for realism.
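The review and polish steps above come down to checking whether each dubbed line still fits the time slot of the original. Here is a minimal Python sketch of that check, assuming you can get per-line durations for both the original and the dubbed dialogue. The `Segment` type, the function name, and the 10% tolerance are illustrative assumptions, not settings from any of the platforms listed.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One line of dialogue with its original and dubbed durations (hypothetical type)."""
    index: int
    original_seconds: float
    dubbed_seconds: float


def flag_pacing_issues(segments, tolerance=0.10):
    """Return (index, relative_drift) for segments whose dub runs too long or too short.

    relative_drift is (dubbed - original) / original, so 0.2 means the dub
    is 20% longer than the original line. The 10% default is an assumed
    starting point, not an industry standard.
    """
    flagged = []
    for segment in segments:
        if segment.original_seconds <= 0:
            raise ValueError(f"Segment {segment.index} has no original duration")
        drift = (segment.dubbed_seconds - segment.original_seconds) / segment.original_seconds
        if abs(drift) > tolerance:
            flagged.append((segment.index, round(drift, 3)))
    return flagged


# Hypothetical durations, for illustration only.
lines = [
    Segment(0, original_seconds=2.0, dubbed_seconds=2.1),
    Segment(1, original_seconds=3.0, dubbed_seconds=3.6),
    Segment(2, original_seconds=4.0, dubbed_seconds=3.2),
]
issues = flag_pacing_issues(lines)
```

Flagged segments are the ones worth retiming or regenerating first during the ADR polish pass. A duration check like this says nothing about lip match or tone, which still need a human eye and ear.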

Why This Matters for Hypersonic Creators

For creators like those in the Deadline Hypersonic universe, the power here isn’t just in being understood. It’s in delivering the same cinematic vibe in multiple languages without losing your unique style.

Imagine guerrilla-shot street scenes in Humboldt playing just as naturally in Japanese as they do in English — no awkward timing, no robotic voices. Just you, speaking fluently in a language you never studied, synced to your lips as if you had.

That’s not just translation. That’s cultural infiltration at light speed.

Next Steps

- If you’re aiming for YouTube reach, start with HeyGen or Rask.ai for a balance between speed and quality.

- For film or series ADR, look at Papercup or DeepDub for human-assisted, near-perfect results.

- Always pair dubbing with localized titles, descriptions, and thumbnails for maximum discoverability.

Bottom line: The tools are here. The audience is global. The question is… do you want them to just read your story — or hear it in their own voice?
